Let’s flow your Business Central data into Microsoft Fabric

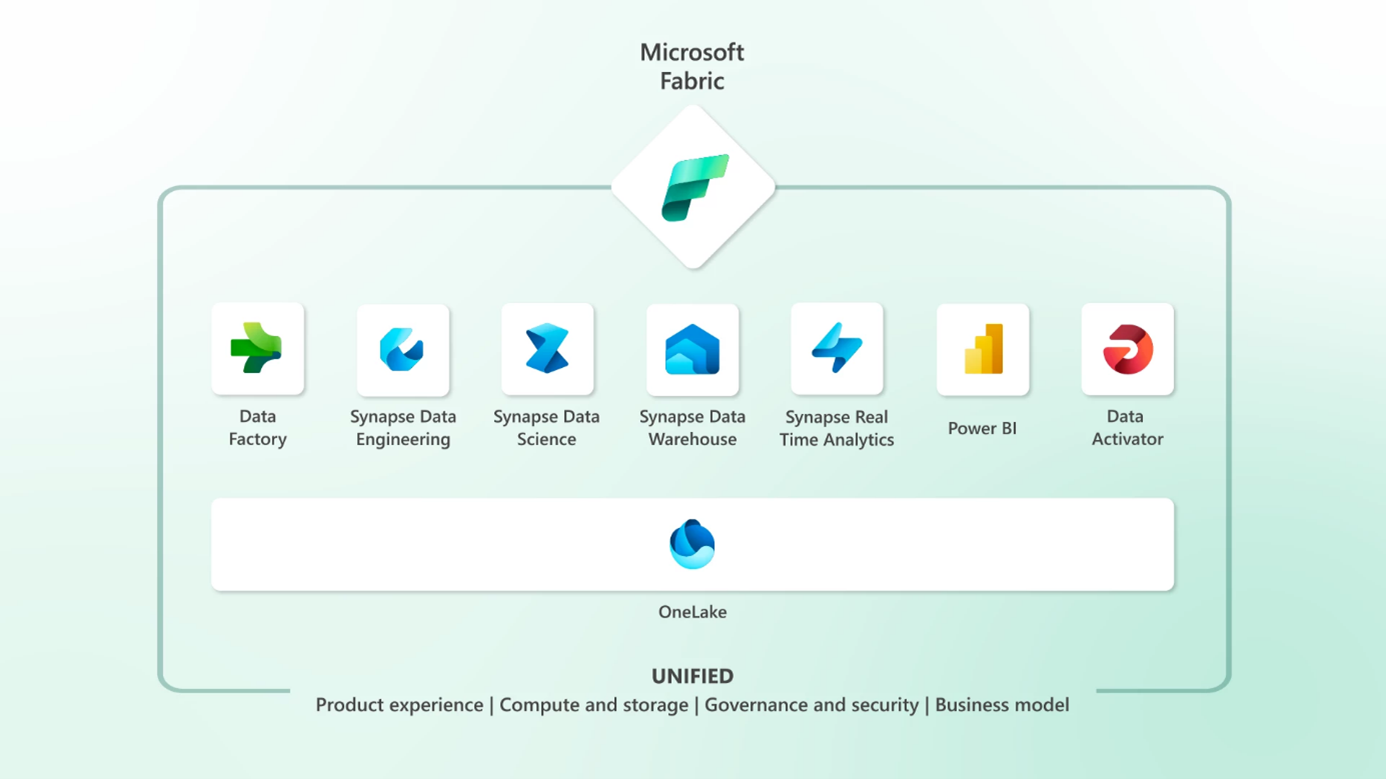


On 23 May 2023, Microsoft announced Microsoft Fabric (see “Introducing Microsoft Fabric: The data platform for the era of AI” on the Azure Blog).

With Microsoft Fabric you have a single source for all your data, so you don’t have to move it to a separate data warehouse or data lake. Fabric is built on OneLake, a single, unified data lake.

On top of that, it integrates seamlessly with Fabric’s core workloads, such as Data Factory, Data Engineering, Data Warehouse and Power BI.

For analysing and reporting on data, this is a huge advantage!

But can you also pull your Business Central data into OneLake?

Luckily, you can! To do so, you first create a Lakehouse in Microsoft Fabric.

A Lakehouse is, roughly speaking, a place where you can store structured and unstructured data from any source, both as files in folders and as tables.

Microsoft has published a good overview of the different ways to store data in Microsoft Fabric:

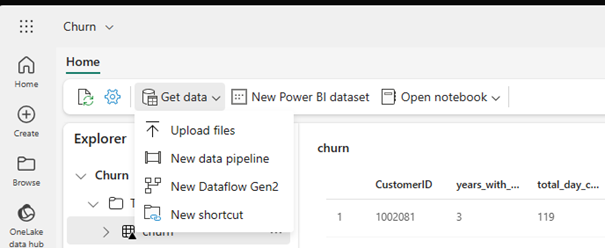

You can load data into a Lakehouse in several ways.

For Business Central, you get your data in through a Dataflow Gen2:

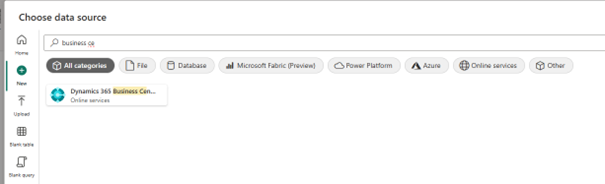

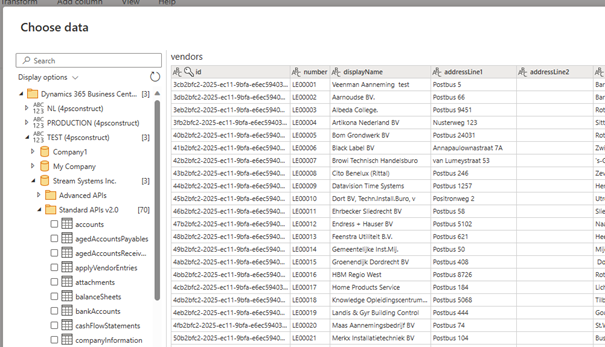

In the dataflow, choose to get data from another source and type “Business Central” in the search box.

You will then see the Business Central connector:

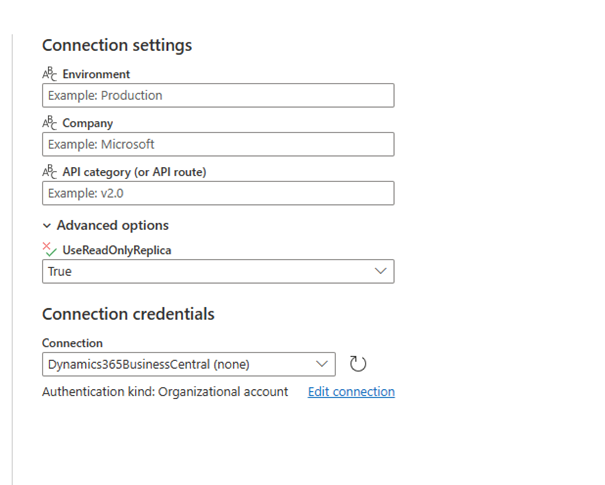

After selecting it, you can fill in the “Environment”, “Company” and “API Category”.

However, you can also leave these fields empty: once you have signed in, the connector shows all existing environments and companies in Business Central, together with all their APIs.
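To see what these three fields stand for, it can help to look at the Business Central API URL they select. The sketch below is a minimal illustration, assuming the standard Business Central online API v2.0 URL layout and assuming that “API Category” corresponds to the API route (`v2.0` for the standard API, `publisher/group/version` for a custom API). The tenant, the company ID and the function name `bc_entity_url` are hypothetical placeholders, not part of the connector.

```python
from urllib.parse import quote

# Base address of the Business Central online API (assumed standard endpoint).
BC_API_BASE = "https://api.businesscentral.dynamics.com/v2.0"


def bc_entity_url(tenant: str, environment: str, company_id: str,
                  entity: str, api_route: str = "v2.0") -> str:
    """Build the OData URL of one API entity, e.g. "vendors".

    api_route is "v2.0" for the standard API (assumed to match the
    "API Category" field), or "publisher/group/version" for a custom API.
    """
    return (
        f"{BC_API_BASE}/{quote(tenant)}/{quote(environment)}"
        f"/api/{api_route}/companies({company_id})/{entity}"
    )


# Hypothetical tenant and company ID, for illustration only.
url = bc_entity_url(
    tenant="contoso.onmicrosoft.com",
    environment="Production",
    company_id="00000000-0000-0000-0000-000000000001",
    entity="vendors",
)
print(url)
```

Leaving the fields empty in the connector simply means you pick the environment, company and API from the list instead of typing them.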

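NB: Please set UseReadOnlyReplica to true in the advanced options! Your queries are then served from a read-only replica of the database instead of the primary one, so the refresh does not put extra load on your production environment.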
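At the API level, Business Central’s read scale-out is requested with the `Data-Access-Intent: ReadOnly` HTTP header. As a sketch, assuming the connector option does the equivalent, a direct API request would carry headers like these (the function `bc_request_headers` and the token value are hypothetical):

```python
def bc_request_headers(access_token: str, read_only: bool = True) -> dict[str, str]:
    """Return HTTP headers for a Business Central API GET request."""
    headers = {
        "Authorization": f"Bearer {access_token}",  # OAuth access token
        "Accept": "application/json",
    }
    if read_only:
        # Ask Business Central to answer from the read-only replica
        # (read scale-out) instead of the primary database.
        headers["Data-Access-Intent"] = "ReadOnly"
    return headers


headers = bc_request_headers("<access-token>")  # placeholder token
print(headers["Data-Access-Intent"])
```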

Next, you choose which tables you want to load into your OneLake:

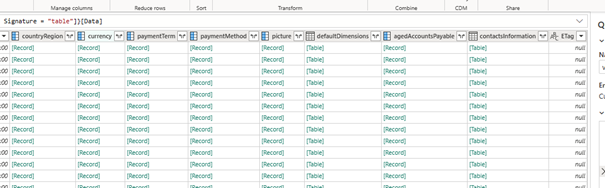

NB: Sometimes you get an error saying that there are empty fields. In that case, remove the extended columns from the table, as shown in the picture below:

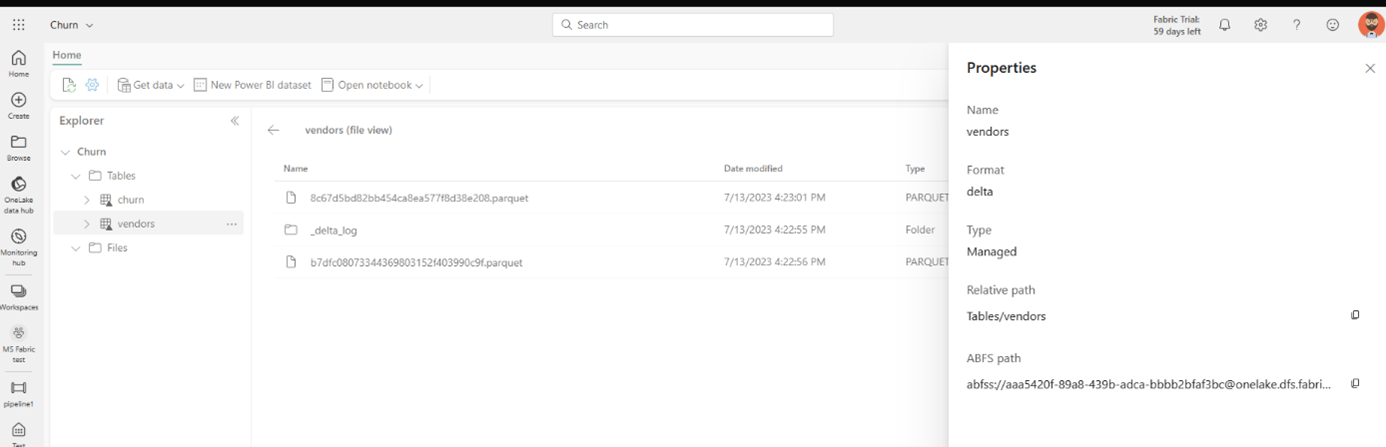
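If you ever load the same API data outside Power Query, the same fix applies. The sketch below assumes that the problematic “extended” columns are the ones holding nested records or lists (for example related entities), and keeps only columns with plain scalar values. The function name `drop_nested_columns` and the sample vendor rows are made up for illustration.

```python
from typing import Any

# Values that can be stored directly in a flat table column.
SCALAR_TYPES = (str, int, float, bool, type(None))


def drop_nested_columns(rows: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Remove every column that holds a nested record or list in any row."""
    nested = {
        key
        for row in rows
        for key, value in row.items()
        if not isinstance(value, SCALAR_TYPES)
    }
    return [{k: v for k, v in row.items() if k not in nested} for row in rows]


# Made-up vendor rows; "currency" is a nested (extended) column.
vendors = [
    {"number": "V0001", "displayName": "Fabrikam", "balance": 0.0,
     "currency": {"code": "EUR"}},
    {"number": "V0002", "displayName": "Contoso", "balance": 12.5,
     "currency": None},
]
flat = drop_nested_columns(vendors)
print(flat)
```

A column is dropped as soon as one row contains a nested value, so every output row ends up with the same flat set of columns.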

Once you have published the dataflow, you can set the refresh schedule, and you can see that all your data (in this case, vendors) is stored in your OneLake:

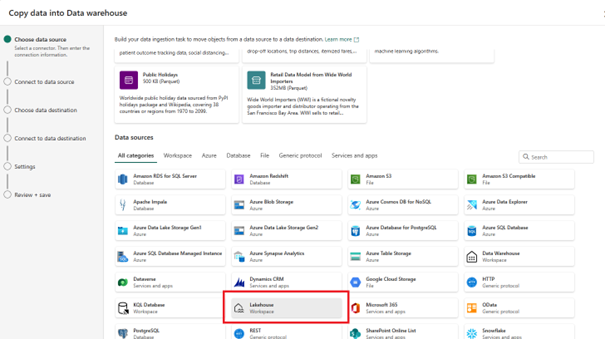

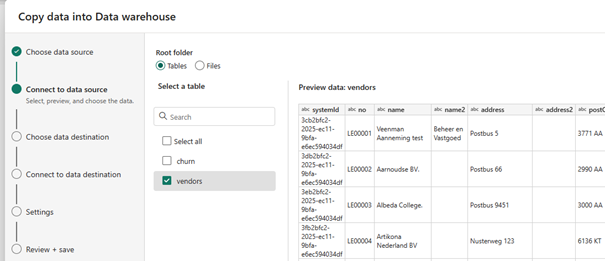

Now you can work with your data in a notebook, or let it flow into a warehouse through a pipeline:

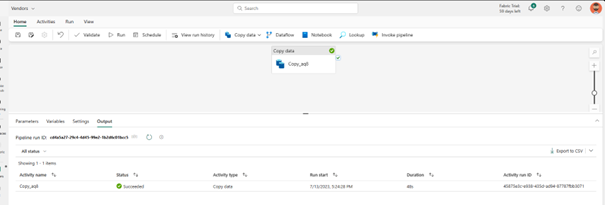

That will create a pipeline for you:

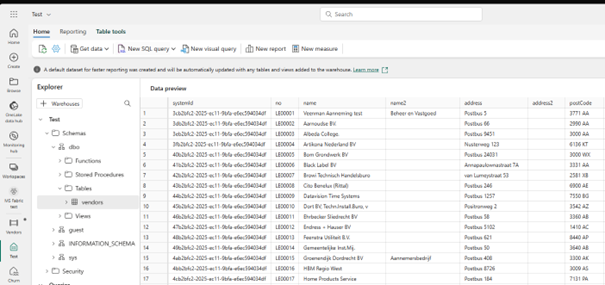

This pipeline then imports your lakehouse data into a structured table in the warehouse.

In this way, you store your BC data in OneLake, refresh it as often as you need, and analyse it there without disturbing your Business Central users!